How to Generate Responses Using Ragie's TypeScript SDK

This cookbook shows how to use Ragie's TypeScript SDK to retrieve relevant information from indexed data sources, pass it to an OpenAI model, and generate responses grounded in the retrieved data. In this example, the system acts as an AI assistant that retrieves chunks from Ragie's Docs to help developers integrate Ragie's RAG API into their applications.

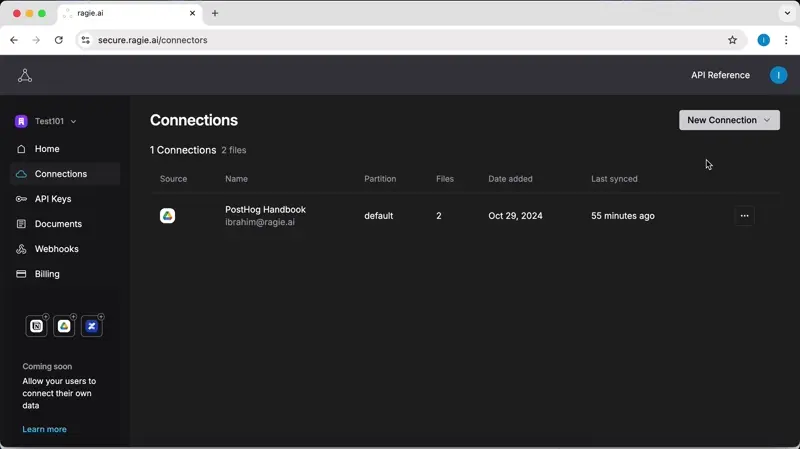

Step 1: Connect Your Data Source to Ragie

Before we begin coding, let's connect our data source (e.g., Google Drive, Notion, or PDFs) to Ragie so that Ragie can index our files for retrieval.

Note: When the import mode is set to `hi_res`, images and tables are also extracted from the document. The `fast` mode extracts only text and can be up to 20 times faster than `hi_res`.
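If you script your uploads, the trade-off in this note can be captured in a small helper. The sketch below is hypothetical: `ImportMode`, `SourceTraits`, and `chooseImportMode` are illustrative names, only the `hi_res` and `fast` values come from this guide, and how the chosen mode is passed to Ragie is not shown.

```typescript
// Hypothetical helper: only the "hi_res" and "fast" values come from this guide.
type ImportMode = "hi_res" | "fast";

interface SourceTraits {
  hasImages: boolean; // does the document contain images worth extracting?
  hasTables: boolean; // does the document contain tables worth extracting?
}

// Pay for hi_res only when images or tables matter; otherwise use the faster text-only mode.
function chooseImportMode(traits: SourceTraits): ImportMode {
  return traits.hasImages || traits.hasTables ? "hi_res" : "fast";
}

console.log(chooseImportMode({ hasImages: false, hasTables: true })); // prints hi_res
console.log(chooseImportMode({ hasImages: false, hasTables: false })); // prints fast
```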

Step 2: Setup and Installation

1. Install the required packages:

```bash
npm install ragie openai dotenv
# TypeScript tooling used to run index.ts in "Testing the Application"
npm install --save-dev typescript ts-node @types/node
```

2. Create a `.env` file to store your API keys securely:

```
# Ragie API Key
RAGIE_API_KEY="YOUR_RAGIE_API_KEY"

# OpenAI API Key
OPENAI_API_KEY="YOUR_OPENAI_API_KEY"
```

Note: Add `.env` to `.gitignore` to avoid exposing your API keys if you use Git for version control.
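Because `process.env` values are typed `string | undefined`, a missing key would otherwise surface only later as an authentication error from one of the APIs. A minimal sketch of failing fast instead; `requireEnv` is a hypothetical helper, and it takes the environment as a parameter so it can be checked without touching the real process environment.

```typescript
// Hypothetical helper: returns the named variable (trimmed) or throws a descriptive error.
type Env = Record<string, string | undefined>;

function requireEnv(env: Env, name: string): string {
  const value = env[name]?.trim();
  if (!value) {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// Example with an in-memory environment; in the app you would pass process.env
// after dotenv.config() has loaded the .env file.
const sampleEnv: Env = { RAGIE_API_KEY: "example-ragie-key", OPENAI_API_KEY: "" };
console.log(requireEnv(sampleEnv, "RAGIE_API_KEY")); // prints example-ragie-key
try {
  requireEnv(sampleEnv, "OPENAI_API_KEY");
} catch (err) {
  console.log((err as Error).message); // prints Missing required environment variable: OPENAI_API_KEY
}
```

In Step 3 this could be used as `new Ragie({ auth: requireEnv(process.env, "RAGIE_API_KEY") })`, so the script stops with a clear message before any API call is made.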

Step 3: Import Dependencies and Initialize SDKs

Here’s the boilerplate code to initialize the Ragie and OpenAI SDKs:

```typescript
import { Ragie } from "ragie";
import * as dotenv from "dotenv";
import OpenAI from "openai";

// Load RAGIE_API_KEY and OPENAI_API_KEY from the .env file into process.env
dotenv.config();

const ragie = new Ragie({ auth: process.env.RAGIE_API_KEY });
const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });
```

Step 4: Query Input Handling

We’ll create a utility function to capture user queries via the command line:

```typescript
// Print a prompt on stdout and resolve with the next line typed on stdin
async function getUserInput(prompt: string): Promise<string> {
  return new Promise((resolve) => {
    const stdin = process.stdin;
    const stdout = process.stdout;

    stdin.resume();
    stdout.write(prompt);

    stdin.once("data", (data) => {
      resolve(data.toString().trim());
      // Pause stdin so the process can exit once the main loop ends
      stdin.pause();
    });
  });
}
```

Step 5: Retrieving Chunks from Ragie

This function asks Ragie for the six most relevant chunks for a query (`topK: 6`) with reranking enabled (`rerank: true`). Each chunk is a snippet of indexed content that Ragie considers relevant to the query.
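To make `topK` concrete: conceptually, Ragie scores candidate chunks on its side (and reranks them when `rerank` is `true`), then returns the best K. The sketch below illustrates only that final selection step on chunks that already carry a score; it is not Ragie's implementation, and the `ScoredChunkLike` shape and `selectTopK` function are hypothetical.

```typescript
// Hypothetical shape: a chunk's text plus a relevance score (higher = more relevant).
interface ScoredChunkLike {
  text: string;
  score: number;
}

// Keep the K highest-scoring chunks, most relevant first, without mutating the input.
function selectTopK(chunks: ScoredChunkLike[], k: number): ScoredChunkLike[] {
  return [...chunks].sort((a, b) => b.score - a.score).slice(0, Math.max(0, k));
}

const candidates: ScoredChunkLike[] = [
  { text: "Install the SDK with npm install ragie.", score: 0.91 },
  { text: "Unrelated changelog entry.", score: 0.12 },
  { text: "Initialize the client with your API key.", score: 0.87 },
];

// Logs the two highest-scoring chunk texts, most relevant first
console.log(selectTopK(candidates, 2).map((c) => c.text));
```

In the real function, the SDK call performs this selection server-side; the caller only chooses `topK`.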

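```typescript
// The return type is inferred from the SDK response: an array of scored chunk
// objects (not strings), each carrying its text in `chunk.text`
async function retrieveChunks(query: string) {
  const result = await ragie.retrievals.retrieve({
    query: query,
    topK: 6, // number of chunks to return
    rerank: true, // rerank the results for relevance
  });

  // Return the full scoredChunks array
  return result.scoredChunks;
}
```

Step 6: Processing the Retrieved Chunks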

Once the chunks are retrieved, we need to combine them into a single string to use as context for the OpenAI model.
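Joining with a bare newline works, but chunk boundaries disappear and a large `topK` can produce a very long prompt. A minimal sketch of a slightly more defensive variant, assuming each chunk exposes a `text` field as in the one-liner used in this guide; `formatChunks`, the `---` separator, and the `maxChars` budget are illustrative choices, not part of Ragie's SDK.

```typescript
// Hypothetical helper: joins chunk texts into one context string.
// - skips chunks whose text is empty after trimming
// - separates chunks with a visible delimiter so the model can tell them apart
// - stops adding chunks once the character budget would be exceeded
function formatChunks(chunks: { text: string }[], maxChars = 8000): string {
  const separator = "\n---\n";
  const parts: string[] = [];
  let length = 0;
  for (const chunk of chunks) {
    const text = chunk.text.trim();
    if (!text) continue;
    const added = (parts.length > 0 ? separator.length : 0) + text.length;
    if (length + added > maxChars) break;
    parts.push(text);
    length += added;
  }
  return parts.join(separator);
}

console.log(formatChunks([{ text: "First chunk." }, { text: "  " }, { text: "Second chunk." }]));
// prints:
// First chunk.
// ---
// Second chunk.
```

Stopping at the first chunk that does not fit (rather than skipping it) keeps the most relevant chunks, since retrieval returns them in relevance order.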

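```typescript
// `chunks` is the array returned by retrieveChunks(query);
// concatenate the text of each scored chunk into one context string
const processedChunks = chunks.map((chunk) => chunk.text).join("\n");
```

Step 7: Generating Responses with OpenAI’s GPT-4o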

The runModel function sends the processed chunks and user query to OpenAI for response generation. It uses a system prompt to set the context and tone of the assistant.
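The essential pattern is that the retrieved text is spliced into the system message between explicit delimiters, while the user's question travels as a separate user message. A minimal sketch of that assembly step on its own, with no network call; `buildMessages` and `ChatMessage` are hypothetical names, and the message shape mirrors the `{ role, content }` objects passed to `openai.chat.completions.create` in `runModel`.

```typescript
// Hypothetical message type mirroring the { role, content } objects used with the OpenAI SDK.
type ChatMessage = { role: "system" | "user"; content: string };

// Splice the retrieved chunk text into the system prompt between delimiters,
// and keep the user's question as its own message.
function buildMessages(instructions: string, chunkText: string, query: string): ChatMessage[] {
  const systemPrompt = [
    instructions,
    "==== START RAG_CHUNKS ====",
    chunkText, // may be empty: the instructions tell the model what that means
    "==== END RAG_CHUNKS ====",
  ].join("\n");
  return [
    { role: "system", content: systemPrompt },
    { role: "user", content: query },
  ];
}

const messages = buildMessages(
  "You are Ragie AI. Answer using the chunks below.",
  "Install the SDK with npm install ragie.",
  "How do I install Ragie?",
);
console.log(messages[1]?.content); // prints How do I install Ragie?
```

Keeping the question out of the system prompt means user input cannot be confused with the retrieved context block.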

```typescript
// Ask the OpenAI model to answer `query`, grounding the answer in `chunkText`
async function runModel(chunkText: string, query: string) {
  // The retrieved chunks are embedded in the system prompt between RAG_CHUNKS markers
  const systemPrompt = `You are an AI DevRel assistant, “Ragie AI”, designed to answer questions about Ragie's Docs, APIs, and SDKs. Your responses should be informed by Ragie's Docs, which will be provided to you using Retrieval-Augmented Generation (RAG) to incorporate Ragie’s specific viewpoint. You will onboard new users, and current users will rely on you for answers to their questions. You should be succinct, original, and speak in the tone of a DevRel Engineer or Developer Success Manager.

When asked a question, keep your responses short, clear, and concise. Ask users to contact Ragie's support (support@ragie.ai) if you can’t answer their questions based on what’s available in Ragie's Docs. If the user asks for a search and there are no results, let the user know that you couldn't find anything and what they might do to find the information they need. If the user asks you personal questions, rely only on publicly available information. Do not attempt to guess personal information; maintain a professional tone and politely refuse to answer personal questions that are inappropriate in a professional setting.

Feel free to chat in a friendly way about other topics, such as the best ergonomic chair for a home-office setup, or to help an engineer generate or debug their code. NEVER mention that you couldn't find information in Ragie's Docs.

Here are relevant chunks from Ragie's Docs that you can use to respond to the user. Remember to incorporate these insights into your responses. If RAG_CHUNKS is empty, no results were found for the user's query.

==== START RAG_CHUNKS ====
${chunkText}
==== END RAG_CHUNKS ====

You should be succinct, original, and speak in the tone of a DevRel Engineer or Developer Success Manager. Keep your response to five sentences or fewer and actively refer to Ragie's Docs (docs.ragie.ai). Do not use the word "delve", and try to sound as professional as possible.

Remember that you are a DevRel Engineer/Developer Success Manager, so maintain a professional tone and avoid unnecessary humor or sarcasm. You are here to provide serious answers and insights. Do not entertain or engage in personal conversations. NEVER use the phrase "according to our docs" in your response.

IMPORTANT RULES:
• Be concise
• Keep responses to FIVE sentences max
• USE correct English
• REFUSE to sing songs
• REFUSE to tell jokes
• REFUSE to write poetry
• DECLINE to respond to nonsense messages
• NEVER refuse to answer questions about the leadership team
• You are a DevRel Engineer; speak in the first person`;

  const chatCompletion = await openai.chat.completions.create({
    model: "gpt-4o",
    messages: [
      { role: "system", content: systemPrompt },
      { role: "user", content: query },
    ],
  });

  // The message content can be null, so callers should handle a missing reply
  return chatCompletion.choices[0]?.message?.content;
}
```

Step 8: Putting It All Together

The main loop combines all the steps. It continuously prompts the user for input, retrieves relevant data from Ragie, processes it, and generates a response using OpenAI.
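Because each iteration is just retrieve, join, generate, the loop body can be pulled into a function that takes the two network-bound steps as parameters. A minimal sketch, assuming hypothetical `retrieve` and `generate` callbacks (in the app they would wrap `retrieveChunks` and `runModel`); injecting them lets you exercise the pipeline with stubs, without API keys or network access.

```typescript
// Hypothetical dependency interface: the two network-bound steps of the pipeline.
interface PipelineDeps {
  retrieve: (query: string) => Promise<{ text: string }[]>;
  generate: (chunkText: string, query: string) => Promise<string | null | undefined>;
}

// One iteration of the main loop: retrieve chunks, join their text, generate a reply.
async function answerQuestion(query: string, deps: PipelineDeps): Promise<string> {
  const chunks = await deps.retrieve(query);
  const chunkText = chunks.map((chunk) => chunk.text).join("\n");
  const response = await deps.generate(chunkText, query);
  return response ?? "(no response)";
}

// Stubbed dependencies: no API keys or network calls needed.
const stubDeps: PipelineDeps = {
  retrieve: async () => [{ text: "Install with npm install ragie." }, { text: "Use ragie.retrievals.retrieve()." }],
  generate: async (chunkText, query) => `Q: ${query} | context lines: ${chunkText.split("\n").length}`,
};

answerQuestion("How do I start?", stubDeps).then((answer) => {
  console.log(answer); // prints Q: How do I start? | context lines: 2
});
```

In the real script, `stubDeps` would be replaced by `{ retrieve: retrieveChunks, generate: runModel }`, assuming the returned chunks expose a `text` field as Step 6 relies on.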

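```typescript
(async function main() {
  console.log("Welcome to Ragie's Git Helper!");

  while (true) {
    try {
      const query = await getUserInput("Please enter your question: ");
      // An empty line ends the session
      if (!query) break;

      console.log("\nRetrieving data from Ragie...");
      const chunks = await retrieveChunks(query);

      // Step 6: combine the chunk texts into a single context string
      const processedChunks = chunks.map((chunk) => chunk.text).join("\n");

      console.log("\nGenerating response...");
      const response = await runModel(processedChunks, query);

      console.log("\nResponse:", response);
    } catch (error) {
      // In strict TypeScript `error` is `unknown`, so narrow it before reading `.message`
      console.error("Error:", error instanceof Error ? error.message : error);
      break;
    }
  }
})();
```

Usage Example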

Suppose a user asks:

How can I integrate Ragie's SDK into my TypeScript application?

Retrieving Relevant Chunks

Ragie retrieves the following snippets from the indexed documents:

- Install the SDK using `npm install ragie`.

- Initialize the SDK with your Ragie API key: `const ragie = new Ragie({ auth: YOUR_API_KEY });`.

- Use the `retrieve()` method to fetch relevant data: `await ragie.retrievals.retrieve({ query, topK: 6 });`.

Generating a Response

The OpenAI model combines the user query and retrieved chunks to produce:

To integrate Ragie's SDK, install it via `npm install ragie`, then initialize it with your API key. Use `ragie.retrievals.retrieve()` to fetch relevant data from your indexed sources.

Testing the Application

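Run the program with:

```bash
npx ts-node index.ts
```

Note: If you prefer not to use npx, install ts-node (and its TypeScript peer dependency) globally:

```bash
npm install -g ts-node typescript
```

Then run:

```bash
ts-node index.ts
```

Enter a query, and the assistant will respond with advice based on Ragie’s Docs. Press Enter on an empty line to exit.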

Conclusion

This recipe shows how to use Ragie’s TypeScript SDK with OpenAI's GPT-4o to retrieve relevant content and generate responses grounded in the files you have connected through Ragie’s Dashboard. Try integrating this setup to add context-rich answers to your AI applications.